The Bias-Variance Trade-off

Introduction to Statistical Learning - PISE

Ca’ Foscari University of Venice

This unit will cover the following topics:

- Simple linear regression

- Polynomial regression

- Bias-variance trade-off

- Cross-validation

Yesterday’s and tomorrow’s data

The signal and the noise

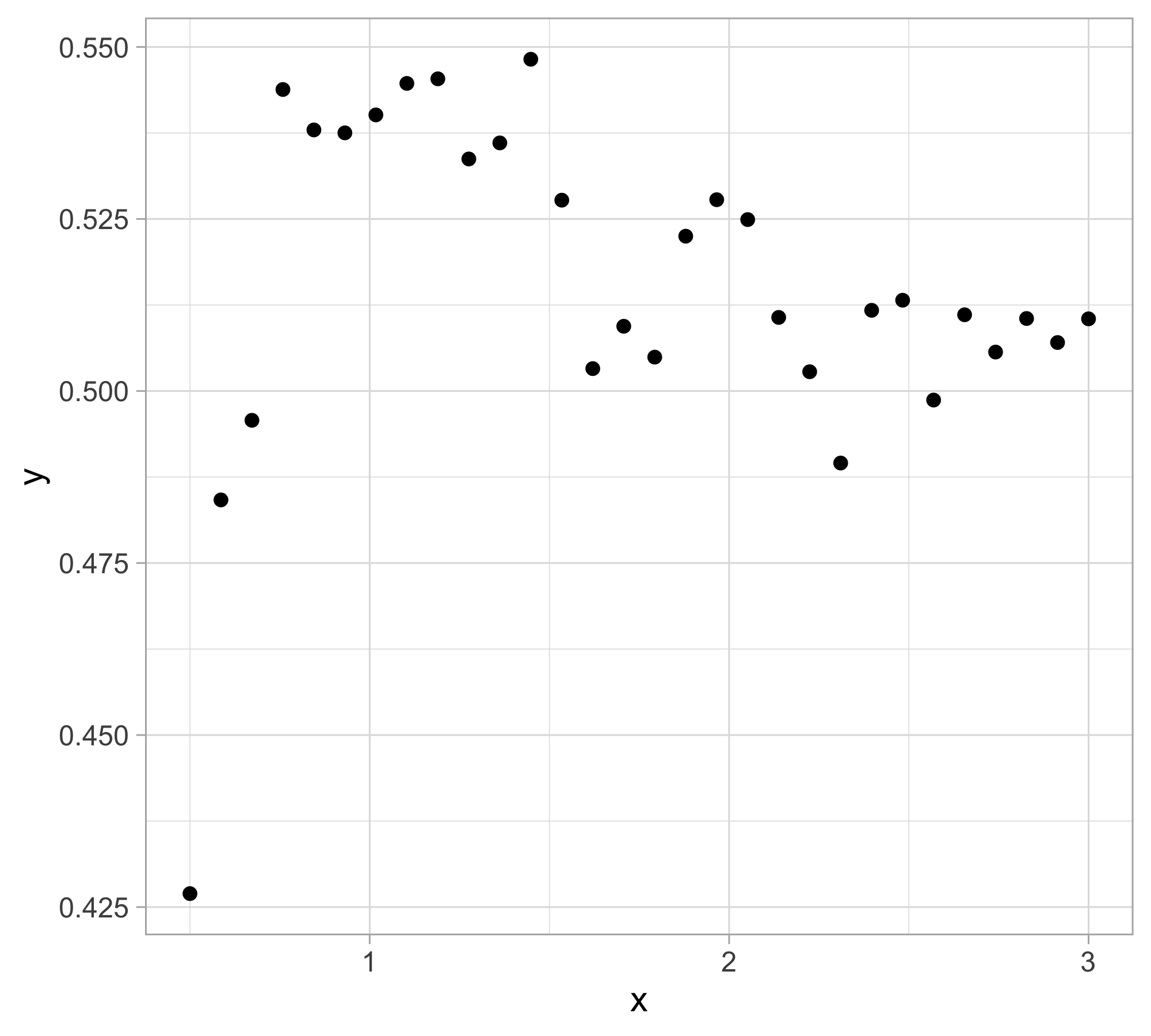

Let us presume that yesterday we observed n = 30 pairs of data (x_i, y_i).

Data were generated according to Y_i = f(x_i) + \epsilon_i, \quad i=1,\dots,n, where each y_i is a realization of Y_i.

The \epsilon_1,\dots,\epsilon_n are iid “error” terms, such that \mathbb{E}(\epsilon_i)=0 and \mathbb{V}\text{ar}(\epsilon_i)=\sigma^2 = 10^{-4}.

Here f(x) is a regression function (signal) that we leave unspecified.
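To make the setup concrete, here is a minimal NumPy sketch of how yesterday’s and tomorrow’s data could be simulated. Only n = 30 and \sigma^2 = 10^{-4} come from the text. The regression function `f_true`, the uniform design points and the Gaussian errors are hypothetical choices made for illustration. The text leaves f(x) unspecified and fixes only the mean and variance of the errors.

```python
import numpy as np

N = 30        # sample size, as in the text
SIGMA = 0.01  # error standard deviation: sigma^2 = 1e-4, as in the text

def f_true(x):
    """Hypothetical regression function: the text leaves the real f(x) unspecified."""
    return 0.5 + 0.03 * np.sin(2 * np.pi * x)

def simulate(x, rng):
    """Draw Y_i = f(x_i) + eps_i with iid N(0, SIGMA^2) errors (normality is an assumption)."""
    return f_true(x) + rng.normal(0.0, SIGMA, size=x.shape)

rng = np.random.default_rng(42)
x = np.sort(rng.uniform(0.0, 1.0, size=N))  # hypothetical covariates x_1, ..., x_n
y = simulate(x, rng)        # yesterday's responses y_1, ..., y_n
y_tilde = simulate(x, rng)  # tomorrow's responses at the same x, with fresh errors
```

Both samples share the signal f_true(x) and differ only in the noise, which is exactly the situation the rest of the unit exploits.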

Tomorrow we will get a new x. We wish to predict Y.

Yesterday’s data

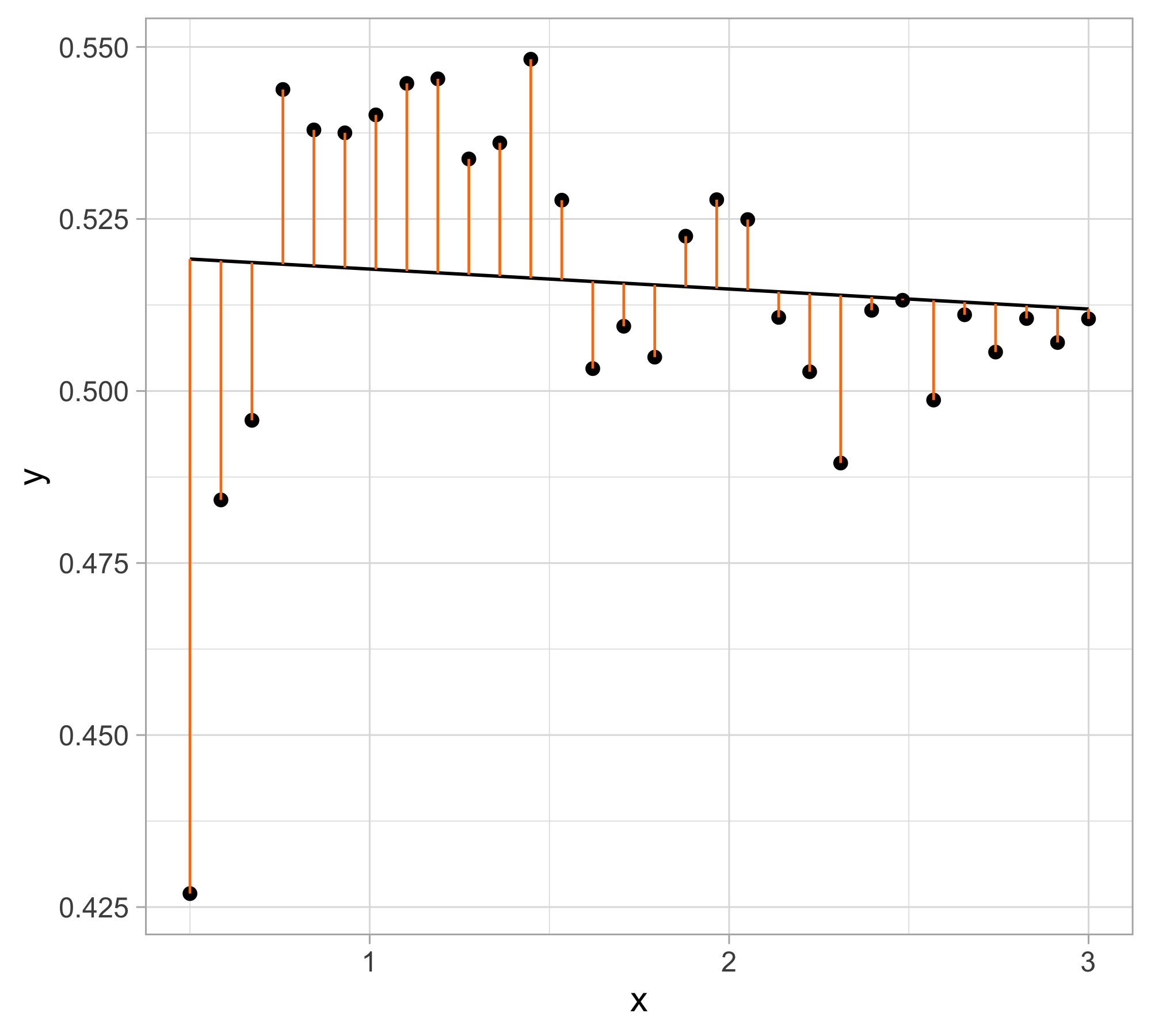

Simple linear regression

The function f(x) is unknown and must therefore be estimated.

A simple approach is simple linear regression, f(x; \beta) = \beta_0 + \beta_1 x, in which f(x) is approximated by a straight line. Here \beta_0 and \beta_1 are two unknown constants representing the intercept and the slope, also known as coefficients or parameters.

Given estimates \hat{\beta}_0 and \hat{\beta}_1 of the model coefficients, we predict future values using \hat{y} = \hat\beta_0 + \hat\beta_1 x, where \hat{y} denotes a prediction of Y on the basis of X = x. The hat symbol denotes an estimated value.

Estimation of the parameters by least squares

Let \hat y_i = \hat\beta_0 + \hat\beta_1 x_i be the prediction for Y based on the ith value of X. Then e_i = y_i - \hat y_i is the ith residual.

We define the residual sum of squares (\mathrm{RSS}) as \mathrm{RSS} = e_1^2+e_2^2+\ldots+e_n^2, or equivalently as \mathrm{RSS} = (y_1 - \hat\beta_0 - \hat\beta_1 x_1)^2+(y_2 - \hat\beta_0 - \hat\beta_1 x_2)^2+\ldots+ (y_n- \hat\beta_0 - \hat\beta_1 x_n)^2.

The least squares approach chooses \hat{\beta}_0 and \hat{\beta}_1 to minimize the \mathrm{RSS}. The minimizing values can be shown to be \begin{aligned} \hat{\beta}_1 &= \frac{\sum_{i=1}^{n}(x_i - \bar x)(y_i - \bar y)}{\sum_{i=1}^{n}(x_i - \bar x)^2}\\ \hat{\beta}_0 &= \bar y - \hat{\beta}_1 \bar x \end{aligned} where \bar y = \frac{1}{n}\sum_{i=1}^n y_i and \bar x = \frac{1}{n}\sum_{i=1}^n x_i are the sample means.
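The closed-form estimates translate directly into code. The function name `least_squares_line` and the toy data are illustrative assumptions. As a cross-check, NumPy’s `np.polyfit(x, y, 1)` should return the same line, with the coefficients listed as slope first and intercept second.

```python
import numpy as np

def least_squares_line(x, y):
    """Closed-form least squares estimates (beta0_hat, beta1_hat) for y ~ beta0 + beta1 * x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_bar, y_bar = x.mean(), y.mean()
    # slope: sum of cross-deviations over sum of squared x-deviations
    beta1_hat = np.sum((x - x_bar) * (y - y_bar)) / np.sum((x - x_bar) ** 2)
    # intercept: the fitted line passes through (x_bar, y_bar)
    beta0_hat = y_bar - beta1_hat * x_bar
    return beta0_hat, beta1_hat

# Hypothetical toy data lying exactly on y = 1 + 2x
b0, b1 = least_squares_line([0, 1, 2, 3], [1, 3, 5, 7])  # b0 = 1.0, b1 = 2.0
```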

Least squares fit: \hat y = 0.520627 - 0.003 x

Assessing the Overall Accuracy of the Model

We compute the mean squared error (\mathrm{MSE}): \mathrm{MSE} = \frac{1}{n} \sum_{i=1}^{n}(y_i - \hat{y}_i)^2 = \frac{1}{n}\mathrm{RSS}.

The R-squared, or fraction of variance explained, is R^2 = \frac{\mathrm{TSS} - \mathrm{RSS}}{\mathrm{TSS}} = 1 - \frac{\mathrm{RSS}}{\mathrm{TSS}}, where \mathrm{TSS} = \sum_{i=1}^{n}(y_i - \bar y)^2 is the total sum of squares.

| Quantity | Value |
|---|---|
| RSS | 0.0171726 |
| MSE | 0.00057242 |
| TSS | 0.01731363 |
| R^2 | 0.008146 |
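The accuracy measures can be computed together from observed and fitted values. The helper `fit_summary` is a hypothetical name. The last lines only re-derive the MSE and R^2 in the table from its RSS and TSS, with n = 30.

```python
import numpy as np

def fit_summary(y, y_hat):
    """Return RSS, MSE, TSS and R^2 for observed values y and fitted values y_hat."""
    y = np.asarray(y, dtype=float)
    y_hat = np.asarray(y_hat, dtype=float)
    rss = np.sum((y - y_hat) ** 2)     # residual sum of squares
    tss = np.sum((y - y.mean()) ** 2)  # total sum of squares
    return {"RSS": rss, "MSE": rss / len(y), "TSS": tss, "R2": 1.0 - rss / tss}

# Cross-check of the table: MSE = RSS / n and R^2 = 1 - RSS / TSS
rss, tss, n = 0.0171726, 0.01731363, 30
print(rss / n)        # approx. 0.00057242
print(1 - rss / tss)  # approx. 0.008146
```

An R^2 this close to zero means the straight line explains almost none of the variability in yesterday’s data.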

Polynomial regression

As before, the function f(x) is unknown and must therefore be estimated.

A more flexible approach is polynomial regression, f(x; \beta) = \beta_0 + \beta_1 x + \beta_2 x^2 + \cdots + \beta_d x^{d}, in which f(x) is approximated by a polynomial of degree d.

This model is still linear in the parameters \beta_0,\dots,\beta_d, so ordinary least squares can be applied.
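Because the model is linear in \beta, fitting it is ordinary least squares on a design matrix with columns 1, x, \dots, x^d. In the sketch below, the function names and the noise-free toy data are illustrative assumptions. `np.vander` builds the design matrix and `np.linalg.lstsq` solves the least squares problem.

```python
import numpy as np

def poly_design(x, d):
    """Design matrix whose columns are 1, x, x^2, ..., x^d."""
    return np.vander(np.asarray(x, dtype=float), N=d + 1, increasing=True)

def fit_poly(x, y, d):
    """OLS estimates (beta_0, ..., beta_d) of a degree-d polynomial regression."""
    X = poly_design(x, d)
    beta_hat, *_ = np.linalg.lstsq(X, np.asarray(y, dtype=float), rcond=None)
    return beta_hat

def predict_poly(beta_hat, x):
    """Evaluate f(x; beta_hat) = beta_0 + beta_1 x + ... + beta_d x^d."""
    return poly_design(x, len(beta_hat) - 1) @ beta_hat

# Hypothetical noise-free points on 1 - 2x + 3x^2
x = np.linspace(-1.0, 1.0, 10)
y = 1 - 2 * x + 3 * x ** 2
beta_hat = fit_poly(x, y, d=2)  # approximately [1, -2, 3]
```

Raw powers of x become badly conditioned as d grows. For high degrees, rescaling x or using an orthogonal basis (for example, the classes in `numpy.polynomial`) is numerically safer, although the fitted values are the same in exact arithmetic.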

How do we choose the degree of the polynomial d?

Without further guidance, any value of d \in \{0,\dots,n-1\} could in principle be appropriate.

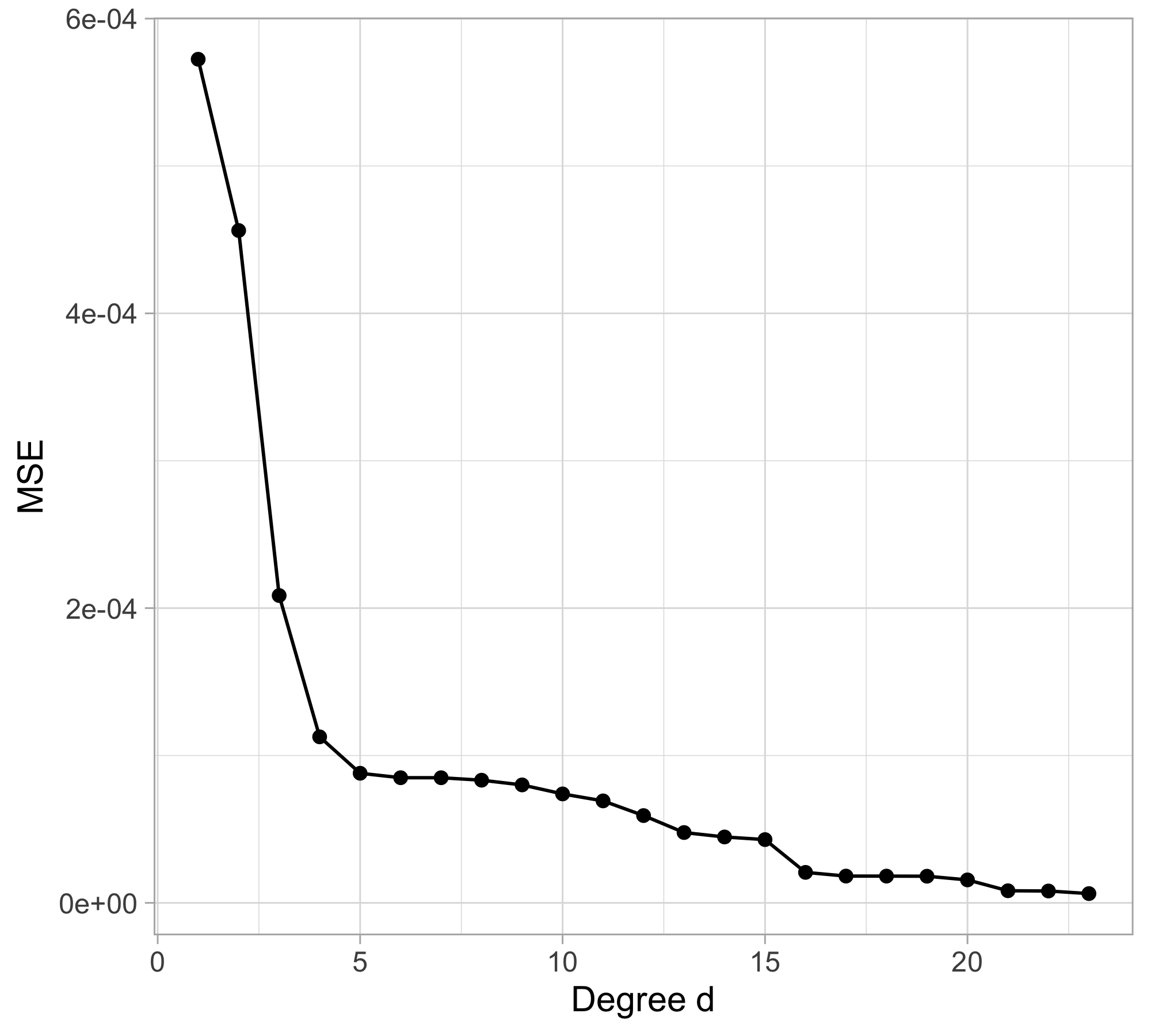

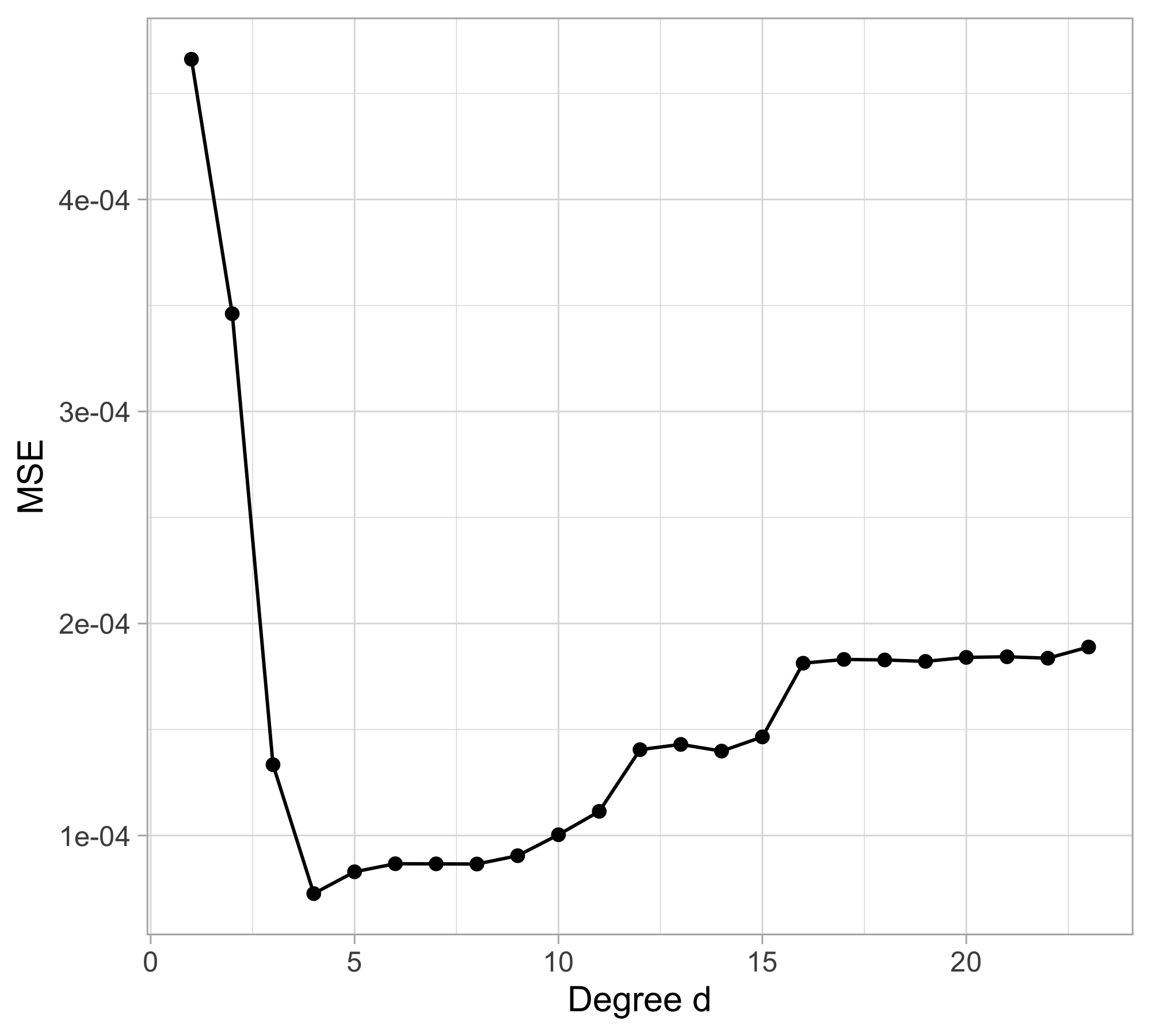

Let us compare the mean squared error (MSE) on yesterday’s data (training) \text{MSE}_{\text{train}} = \frac{1}{n}\sum_{i=1}^n\{y_i -f(x_i; \hat{\beta})\}^2, or alternatively R^2_\text{train}, for different values of d…

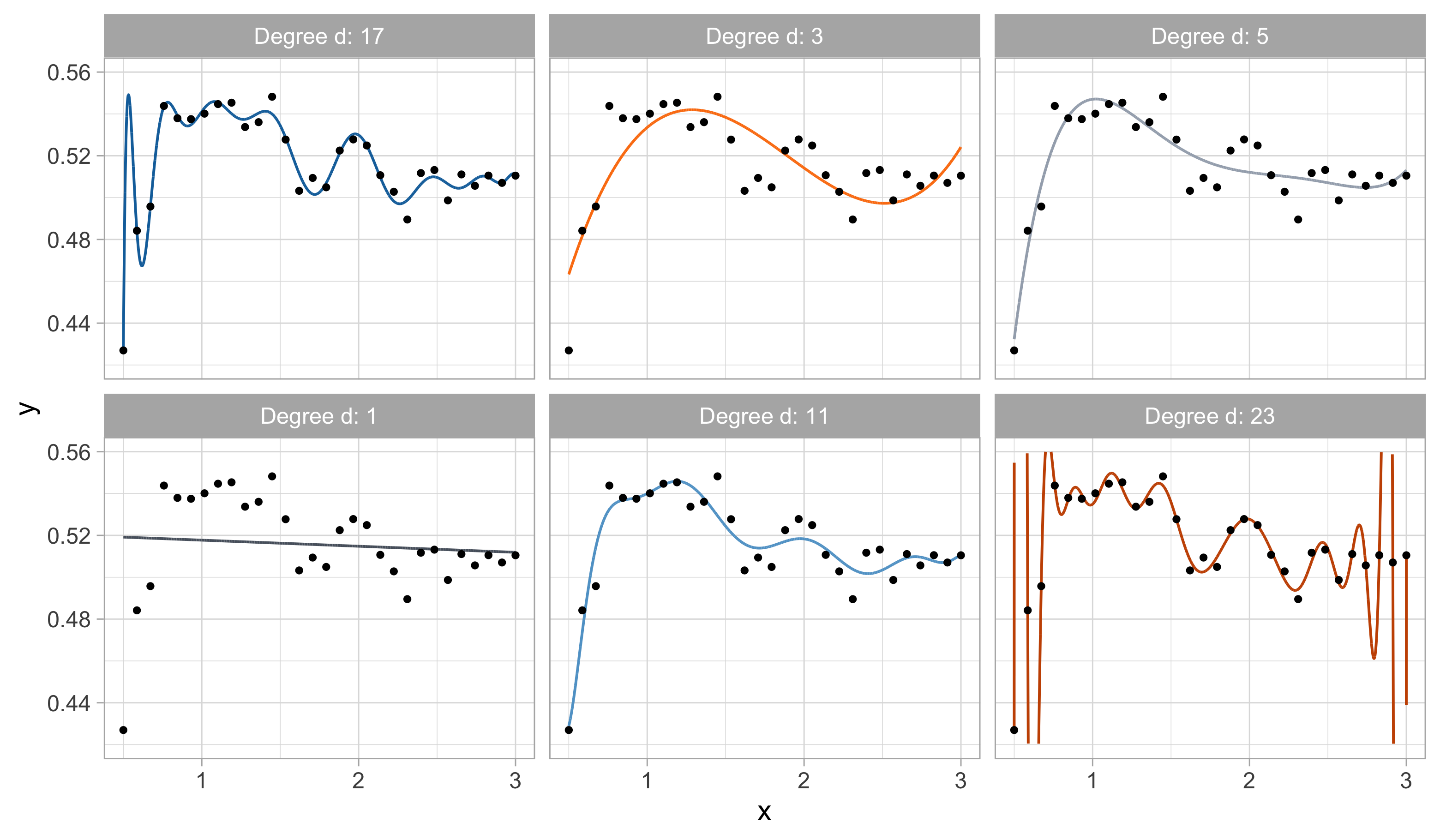

Yesterday’s data, polynomial regression

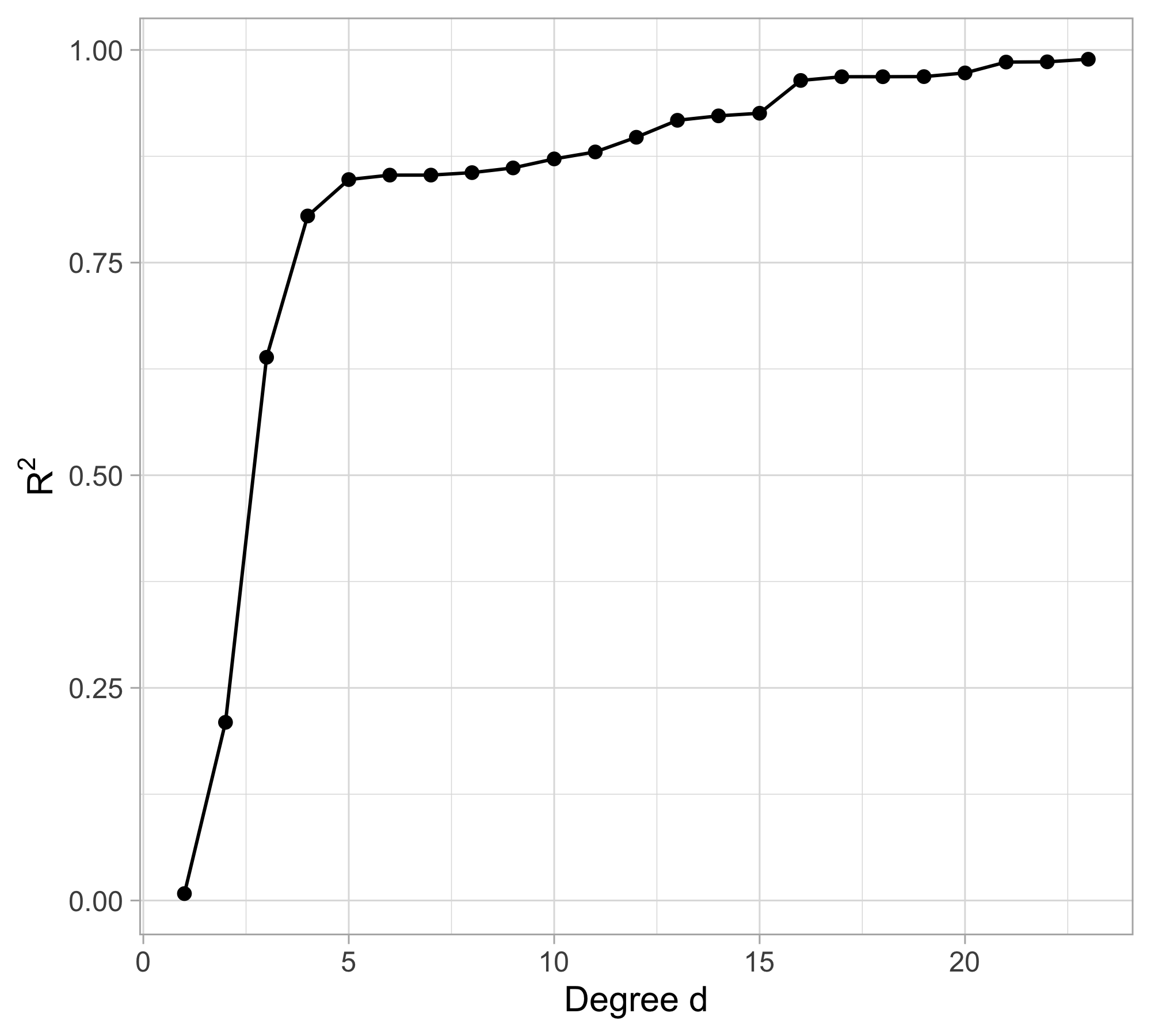

Yesterday’s data, goodness of fit

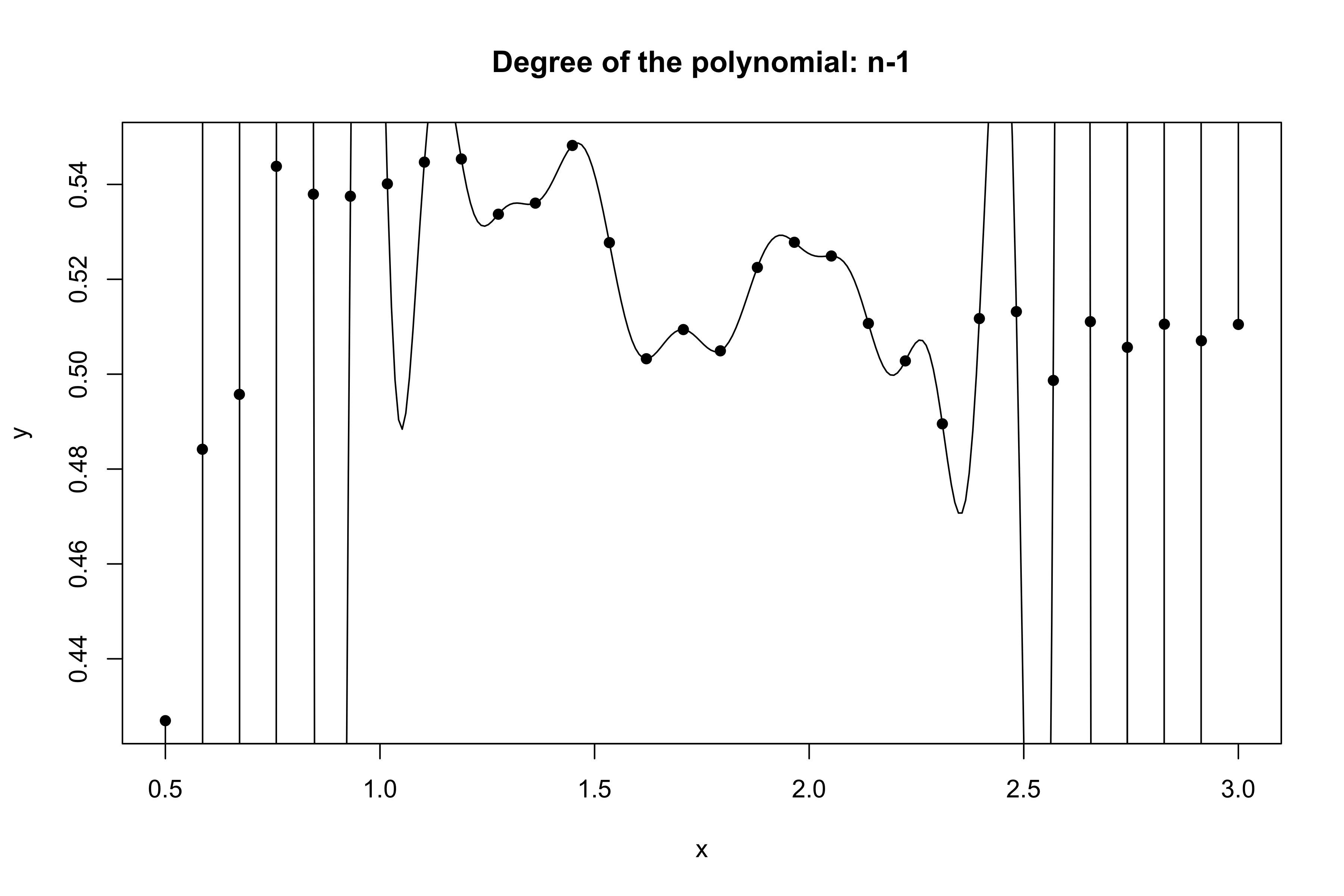

Yesterday’s data, polynomial interpolation (d = n-1)

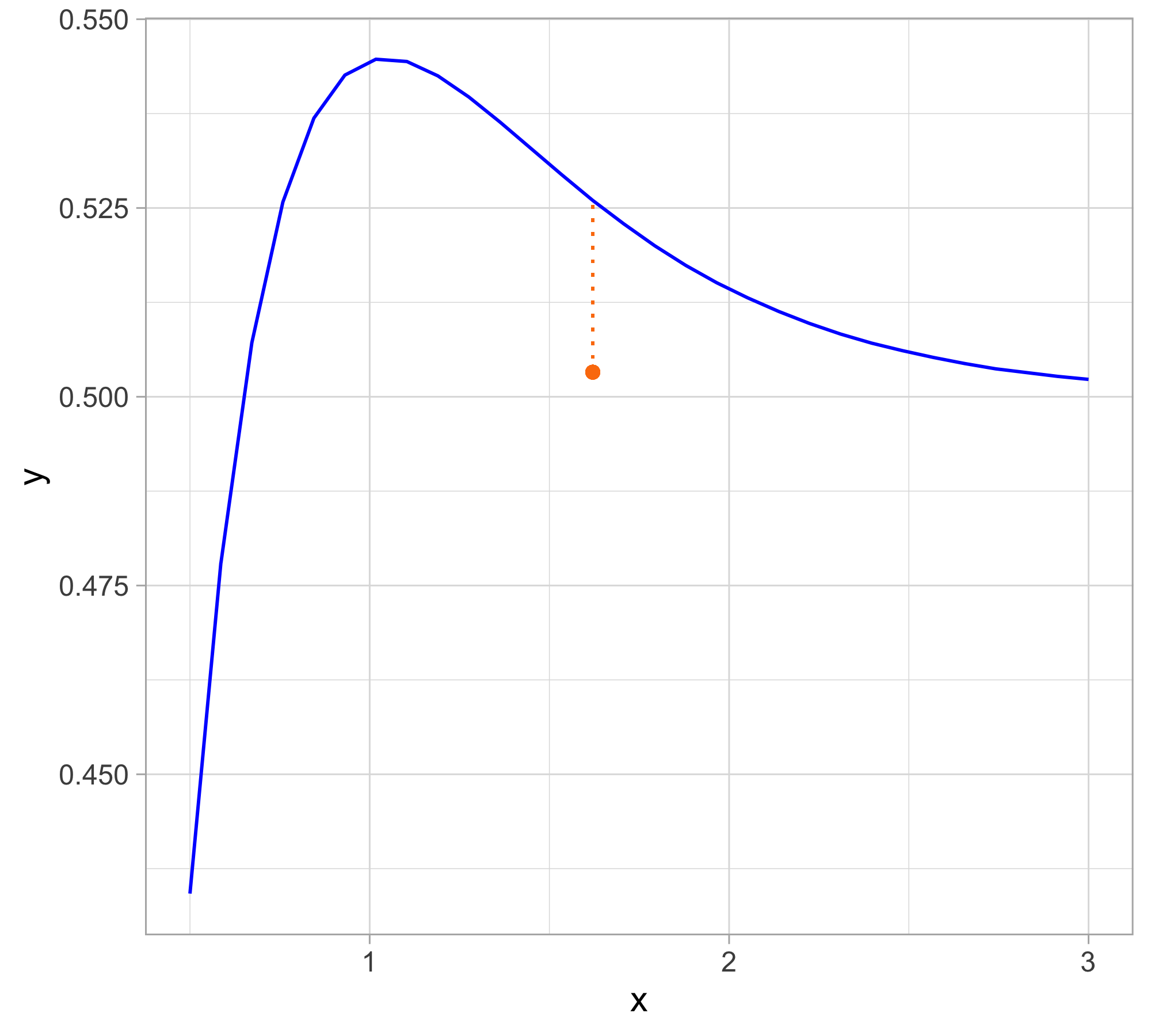

Yesterday’s data, tomorrow’s prediction

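The training MSE decreases (more precisely, never increases) as the number of parameters grows. Similarly, R^2_\text{train} never decreases as a function of d. It can be proved that this always happens with ordinary least squares. A polynomial of degree d is a special case of one of degree d+1 (set \beta_{d+1} = 0), so the minimized RSS cannot increase when a term is added.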
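This monotonicity can be checked numerically. The sketch below fits d = 0 to 11 by OLS on hypothetical data (a sine curve plus Gaussian noise) and records \text{MSE}_\text{train} and R^2_\text{train}. The covariates are placed on [-1, 1] by assumption, which keeps the powers of x reasonably well conditioned.

```python
import numpy as np

def train_fit(x, y, d):
    """Training MSE and R^2 of a degree-d polynomial fitted by OLS."""
    X = np.vander(x, N=d + 1, increasing=True)  # columns 1, x, ..., x^d
    beta_hat, *_ = np.linalg.lstsq(X, y, rcond=None)
    rss = np.sum((y - X @ beta_hat) ** 2)
    tss = np.sum((y - y.mean()) ** 2)
    return rss / len(y), 1.0 - rss / tss

rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 30)                         # hypothetical design, n = 30
y = np.sin(3 * x) + rng.normal(0.0, 0.1, size=x.size)  # hypothetical responses
stats = [train_fit(x, y, d) for d in range(12)]
mse_train = [m for m, _ in stats]
r2_train = [r for _, r in stats]

# Nested models: the RSS can only go down as d grows (up to floating-point round-off)
mse_non_increasing = all(b <= a + 1e-10 for a, b in zip(mse_train, mse_train[1:]))
r2_non_decreasing = all(b >= a - 1e-10 for a, b in zip(r2_train, r2_train[1:]))
```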

One might be tempted to make d as large as possible, so that the model is as flexible as possible…

Taking this reasoning to the extreme would lead to the choice d = n-1, so that \text{MSE}_\text{train} = 0, \qquad R^2_\text{train} = 1, i.e., a perfect fit. This procedure is called interpolation.
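The interpolation limit can be reproduced on simulated data. This sketch assumes hypothetical equispaced covariates and noisy responses. It fits d = n - 1 = 29 with `numpy.polynomial.Chebyshev.fit`. A Chebyshev basis spans the same polynomials as 1, x, \dots, x^{29}, and it is swapped in here only because raw powers are numerically unusable at this degree.

```python
import numpy as np
from numpy.polynomial import Chebyshev

rng = np.random.default_rng(1)
n = 30
x = np.linspace(0.0, 1.0, n)                  # hypothetical design points
y = 0.5 + rng.normal(0.0, 0.01, size=n)       # hypothetical noisy responses

# Degree d = n - 1: as many coefficients as observations, so the curve can
# pass through every point (interpolation).
p = Chebyshev.fit(x, y, deg=n - 1)
mse_train = np.mean((y - p(x)) ** 2)          # expected to be ~0, up to round-off
```

Although the training error vanishes, the interpolating polynomial reproduces the noise exactly.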

However, we are not interested in predicting yesterday’s data. Our goal is to predict tomorrow’s data, i.e., a new set of n = 30 points (x_1, \tilde{y}_1), \dots, (x_n, \tilde{y}_n), using \hat{y}_i = f(x_i; \hat{\beta}), where \hat{\beta} is obtained from yesterday’s data.

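Remark. Tomorrow’s random variables \tilde{Y}_1,\dots, \tilde{Y}_n follow the same scheme as yesterday’s data, i.e., \tilde{Y}_i = f(x_i) + \tilde{\epsilon}_i, i = 1,\dots,n, with new iid errors \tilde{\epsilon}_i having mean 0 and variance \sigma^2.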

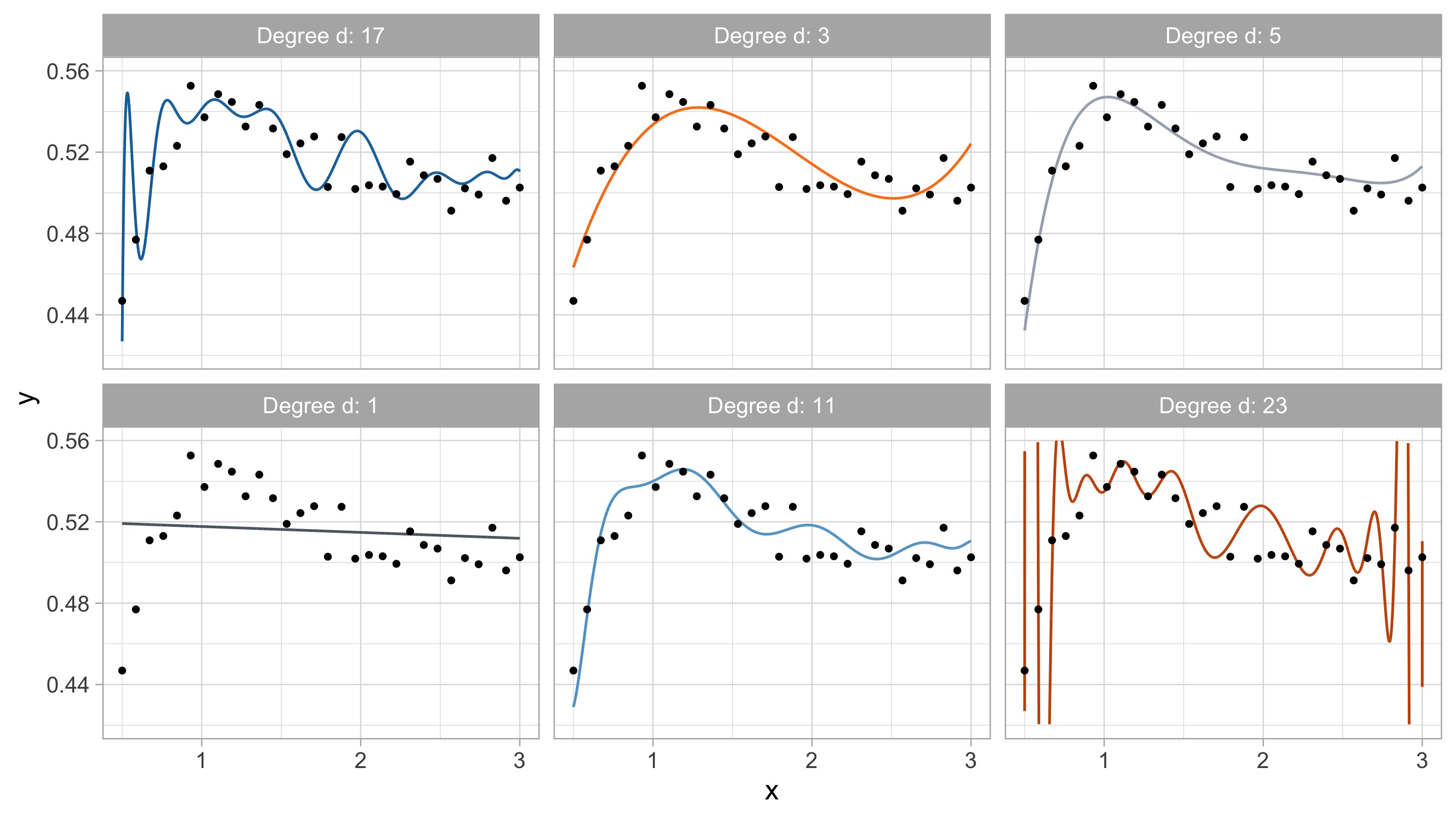

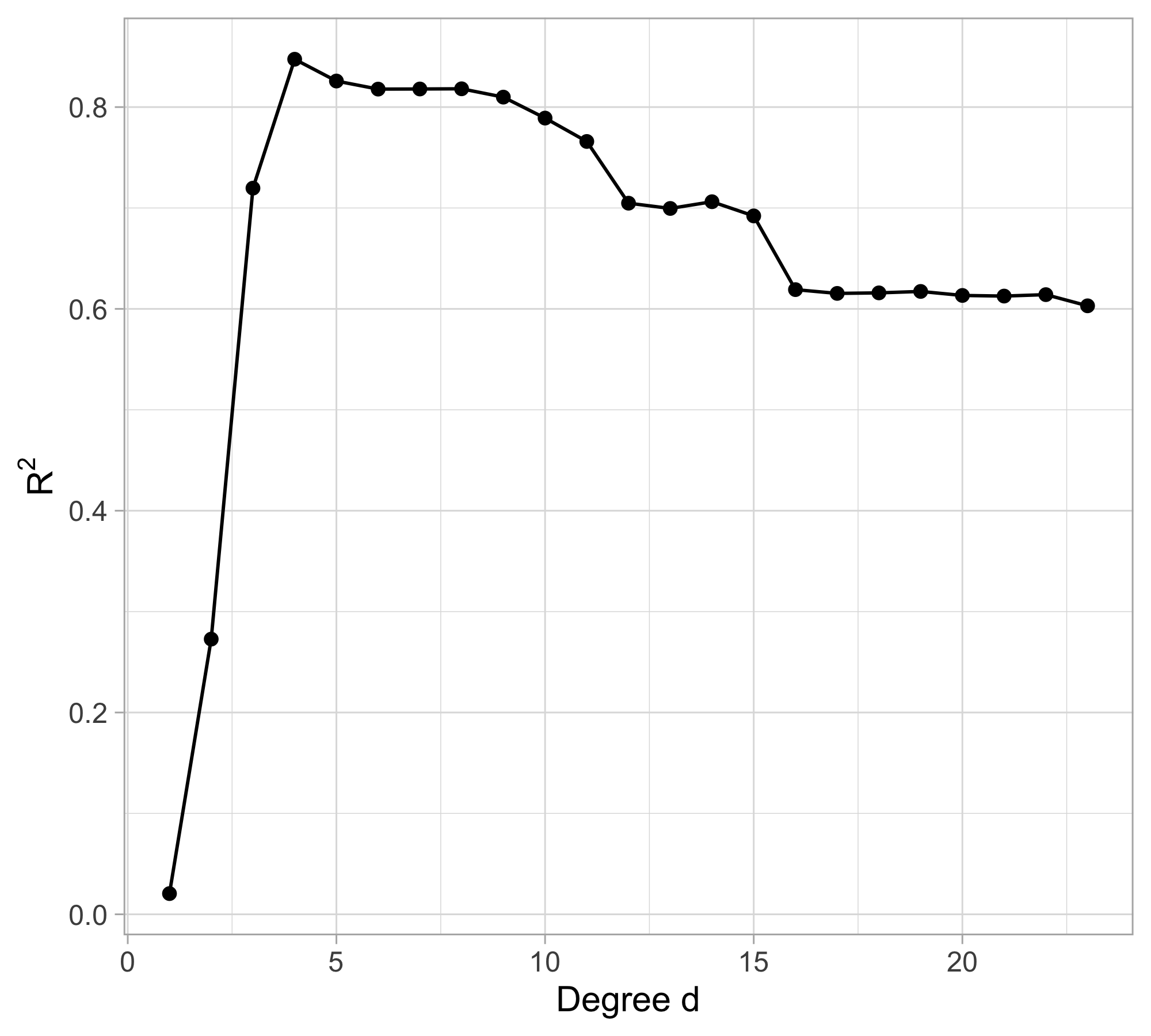

Tomorrow’s data, polynomial regression

Tomorrow’s data, goodness of fit

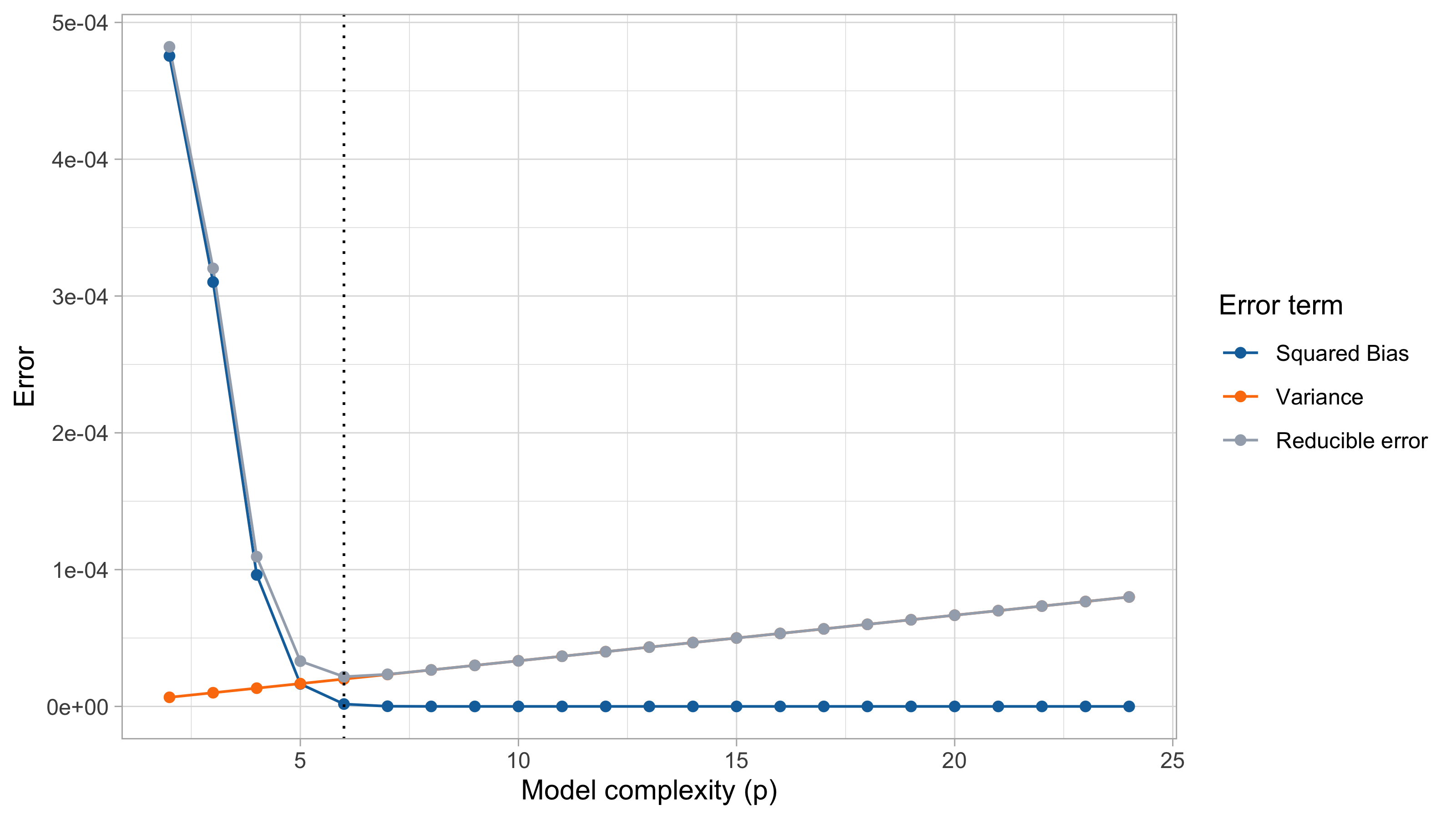

The Bias-Variance Trade-Off

If we knew f(x)…

Bias-variance trade-off

When the number of parameters p = d + 1 grows, the (expected) mean squared error on new data first decreases and then increases. In the example, the theoretical optimum is p = 6 (a 5th-degree polynomial).
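If f(x) were known, the expected squared error of a prediction at x could be split into three parts. The following sketches the standard decomposition. It assumes that tomorrow’s observation at x is \tilde{Y} = f(x) + \tilde{\epsilon}, where \tilde{\epsilon} has mean 0 and variance \sigma^2 and is independent of yesterday’s data, and hence of \hat{f}(x):

```latex
\begin{aligned}
\tilde{Y} - \hat{f}(x) &= \tilde{\epsilon} + \big[f(x) - \mathbb{E}\{\hat{f}(x)\}\big] + \big[\mathbb{E}\{\hat{f}(x)\} - \hat{f}(x)\big],\\
\mathbb{E}\big[\{\tilde{Y} - \hat{f}(x)\}^2\big] &= \underbrace{\sigma^2}_{\text{irreducible error}}
 + \underbrace{\big[\mathbb{E}\{\hat{f}(x)\} - f(x)\big]^2}_{\text{squared bias}}
 + \underbrace{\mathbb{V}\text{ar}\{\hat{f}(x)\}}_{\text{variance}}.
\end{aligned}
```

The cross terms vanish for two reasons: \tilde{\epsilon} has mean zero and is independent of \hat{f}(x), and \mathbb{E}\{\hat{f}(x)\} - \hat{f}(x) also has mean zero. Only the last two terms depend on the chosen model, which is where the trade-off arises.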

The bias, \mathbb{E}\{\hat{f}(\bm{x})\} - f(\bm{x}), measures the ability of \hat{f}(\bm{x}) to reconstruct the true f(\bm{x}). It is due to our lack of knowledge of the data-generating mechanism, and it equals zero when \mathbb{E}\{\hat{f}(\bm{x})\} = f(\bm{x}).

The bias term can be reduced by increasing the flexibility of the model (e.g., by considering a high value for p).

The variance measures the variability of the estimator \hat{f}(\bm{x}) and its tendency to follow random fluctuations of the data.

The variance increases with the model complexity.

It is not possible to minimize the bias and the variance simultaneously: there is a trade-off.

We say that an estimator is overfitting the data if an increase in variance comes without important gains in terms of bias.
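When f is known, as in a simulation, bias and variance can be approximated by Monte Carlo. The procedure draws many independent copies of yesterday’s data, refits the model on each one, and examines the spread of the predictions \hat{f}(x_0). This is a sketch under hypothetical choices: `f_true`, Gaussian errors with \sigma = 0.01, an equispaced design with n = 30, the point x_0 = 0.25 and the number of replicates are all assumptions. `numpy.polynomial.Polynomial.fit` maps x to [-1, 1] internally, which keeps the fits stable.

```python
import numpy as np
from numpy.polynomial import Polynomial

def f_true(x):
    """Hypothetical regression function (in practice f is unknown)."""
    return 0.5 + 0.03 * np.sin(2 * np.pi * x)

def bias_variance_at(x0, d, x, sigma=0.01, n_rep=1000, seed=0):
    """Monte Carlo estimates of squared bias and variance of f_hat(x0) for a degree-d fit."""
    rng = np.random.default_rng(seed)
    preds = np.empty(n_rep)
    for r in range(n_rep):
        y = f_true(x) + rng.normal(0.0, sigma, size=x.size)  # a fresh "yesterday"
        preds[r] = Polynomial.fit(x, y, deg=d)(x0)           # f_hat(x0) for this sample
    bias2 = (preds.mean() - f_true(x0)) ** 2
    var = preds.var()  # ddof=0, so bias2 + var equals the Monte Carlo mean squared error
    return bias2, var, preds

x = np.linspace(0.0, 1.0, 30)  # fixed hypothetical design
for d in (1, 3, 5, 10):
    bias2, var, _ = bias_variance_at(0.25, d, x)
    print(f"d={d:2d}  bias^2={bias2:.2e}  var={var:.2e}  sum={bias2 + var:.2e}")
```

With this particular f, the straight line (d = 1) should show a clearly larger squared bias than d = 10, while d = 10 should show the larger variance.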

But since we do not know f(x)…

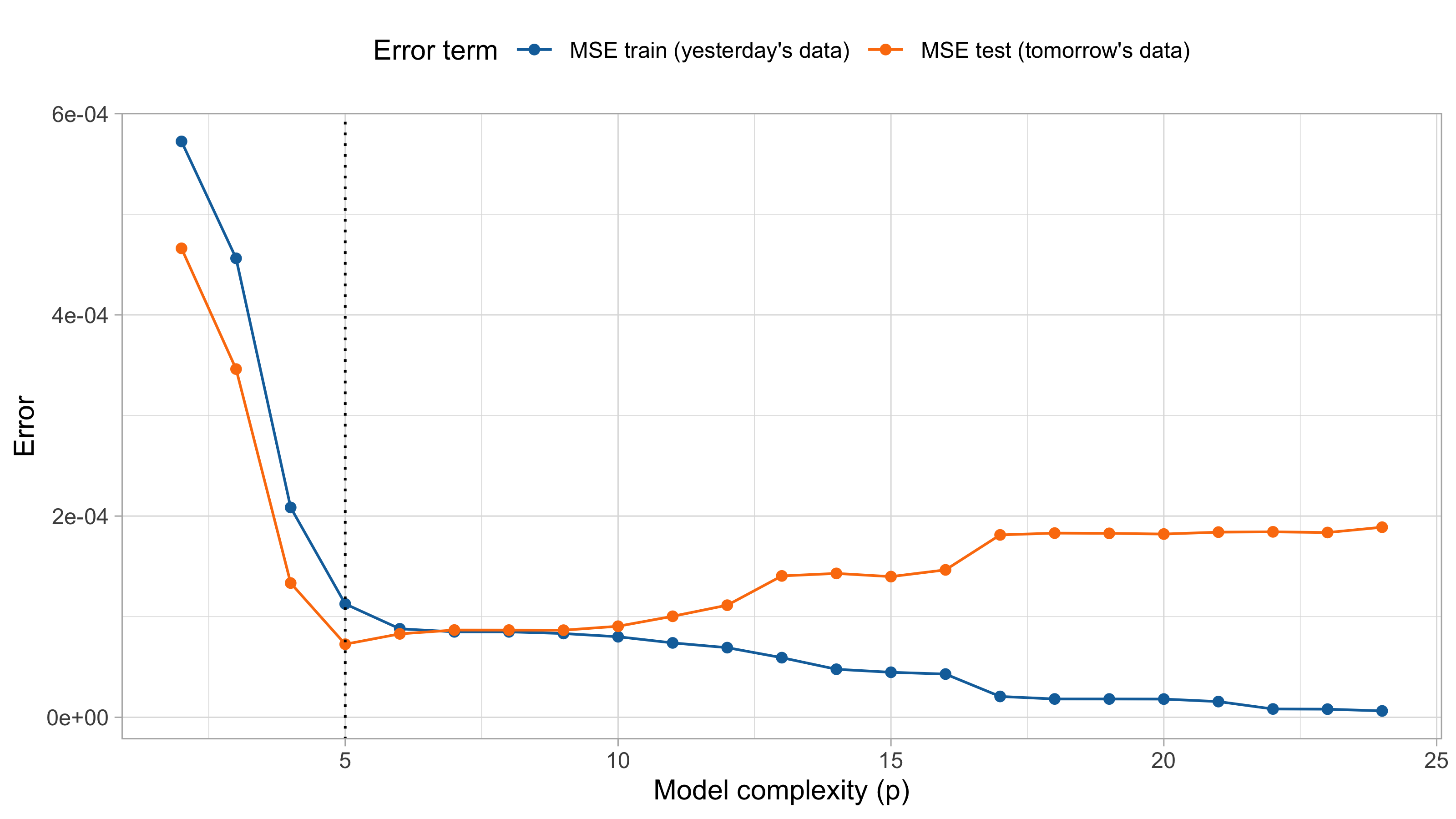

We have just concluded that we must expect a trade-off between the bias and variance components. In practice, however, we cannot compute these components, because f(x) is unknown.

A simple solution is to split the observations into two parts: a training set (y_1,\dots,y_n) and a test set (\tilde{y}_1,\dots,\tilde{y}_n), sharing the same covariates x_1,\dots,x_n.

We fit the model \hat{f} on the n training observations and use it to predict the n observations in the test set.

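This yields an unbiased estimate of the in-sample prediction error: \widehat{\mathrm{ErrF}} = \frac{1}{n}\sum_{i=1}^n\mathscr{L}\{\tilde{y}_i; \hat{f}(\bm{x}_i)\}, where \mathscr{L} is a loss function, e.g., the squared error loss \mathscr{L}\{\tilde{y}; \hat{f}(\bm{x})\} = \{\tilde{y} - \hat{f}(\bm{x})\}^2.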
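The following is a minimal sketch of this train/test scheme with squared error loss. Yesterday’s actual dataset is not reproduced here, so both samples are simulated from the hypothetical `f_true` with Gaussian noise at shared covariates. The seed and the range of degrees are also arbitrary choices. Each polynomial is fitted on the training set only, and \widehat{\mathrm{ErrF}} is the mean squared error on the test set.

```python
import numpy as np
from numpy.polynomial import Polynomial

def f_true(x):
    """Hypothetical regression function, used only to simulate data."""
    return 0.5 + 0.03 * np.sin(2 * np.pi * x)

rng = np.random.default_rng(1)
x = np.linspace(0.0, 1.0, 30)                             # shared covariates x_1, ..., x_n
y_train = f_true(x) + rng.normal(0.0, 0.01, size=x.size)  # training set ("yesterday")
y_test = f_true(x) + rng.normal(0.0, 0.01, size=x.size)   # test set ("tomorrow")

def err_hat(d):
    """Training MSE and test-set estimate of ErrF (squared error loss) for degree d."""
    y_hat = Polynomial.fit(x, y_train, deg=d)(x)  # fitted on the training set only
    return np.mean((y_train - y_hat) ** 2), np.mean((y_test - y_hat) ** 2)

degrees = range(0, 16)
results = [err_hat(d) for d in degrees]
for d, (mse_tr, mse_te) in zip(degrees, results):
    print(f"d={d:2d}  MSE_train={mse_tr:.2e}  MSE_test={mse_te:.2e}")
best_d = min(degrees, key=lambda d: results[d][1])  # degree with the smallest test MSE
```

The training column can only decrease as d grows. The test column is the quantity used to choose d.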

This is precisely what we already did with yesterday’s and tomorrow’s data!

MSE on training and test set (recap)

Comments and remarks

The mean squared error on tomorrow’s data (test) is defined as \text{MSE}_{\text{test}} = \frac{1}{n}\sum_{i=1}^n\{\tilde{y}_i -f(x_i; \hat{\beta})\}^2, and R^2_\text{test} is defined similarly. We would like \text{MSE}_{\text{test}} to be as small as possible.

For small values of d, an increase in the degree of the polynomial improves the fit. In other words, at the beginning, both the \text{MSE}_{\text{train}} and the \text{MSE}_{\text{test}} decrease.

For larger values of d, the improvement gradually stops, and the polynomial starts to follow random fluctuations in yesterday’s data that are not present in the new sample.

This over-adaptation to yesterday’s data is called overfitting: \text{MSE}_{\text{train}} is low, but \text{MSE}_{\text{test}} is high.

Yesterday’s dataset is available from the website of the textbook by Azzalini and Scarpa (2013):